Digest - Socio-PLT - Principles for Programming Language Adoption

Link: pdf

This paper caught my eye today since I’ve been doing some comparative analysis of programming languages. In sum, it studies how programming languages come to be adopted. It poses insightful questions and proposes hypotheses for future exploration.

Some salient aspects for the functional programming community and type system researchers are brought up. Module systems, types, and FP are all touched upon, and Haskell is named a few times.

I quote the questions and hypotheses below for easy perusing. I’ll follow up with some of my thoughts and experiences.

Synopsis: Questions

Why are industrial language designers adopting generators and coroutines instead of continuations?

Was Backus right in that language complexity is a sufficiently persuasive reason to adopt functional programming? More strongly, is worse better¹ in that implementation simplicity trumps language generality?

What actually convinces programmers to adopt a language?

How can we prevent sexy² types from being another source of “prolonged embarrassment” to the research community?

What data should language designers track?

How do we perform, evaluate, and benefit from research into developing applications in a language?

What are the perceptions about testing and typing that drive the differing beliefs, how do they relate to practice, and what opportunities and course corrections does this pose?

What is an appropriate model for adoption?

How can we exploit social networks to persuade language implementors and programmers to adopt best practices?

How can we find and optimize for domain boundaries?

How many languages do programmers strongly and weakly know? Is there a notion of linguistic saturation that limits reasonable expectations of programmers?

How can feature designers more directly observe and exploit social learning?

How can researchers, language implementors, and programmers cooperate to expedite adaptation?

Has controversy restricted the design space for programming language techniques?

What sorts of programmers are early adopters of new languages and tools? What features and languages are they familiar with?

How averse are programmers to longer compilation or interpreter startup times? How willing are they to trade time for improved error checking?

How does latency sensitivity vary across user populations?

Can we ease evaluation of proposed language features? Can we catalog and predict the likely sources of trouble?

Which other programming language features (aside from modularity) also require socio-technical evaluation?

To what extent do distinct language communities have distinct values? Are there values that are important to one community and completely irrelevant to another?

How do the values of a language community change over time? For instance, do designers become more or less performance-focused as languages become popular? More or less focused on ease of implementation?

Synopsis: Hypotheses

(1) Working on the closure side of the duality increases influence on programming language researchers, but decreases influence on both practitioners and software engineering researchers.

(2) Both programming language designers and programmers incorrectly perceive the performance of languages and features in practice.

(3) Developer demographics influence technical analysis.

(4) Programmers will abandon a language if it is not updated to address use cases that are facilitated by its competitors.

(5) The productivity and correctness benefits of functional programming are better correlated with the current community of developers than with the languages themselves.

(6) The diffusion of innovation model better predicts language and feature adoption than both the diffusion of information model and the change function.

(7) Many languages and features have poor simplicity, trialability, and observability. These weaknesses are more likely in innovations with low adoption.

(8) A significant percentage of professional programmers are aware of functional and parallel programming but do not use them. Knowledge is not the adoption barrier.

(9) Developers primarily care about how quickly they get feedback about mistakes, not about how long before they have an executable binary.

(10) Users are more likely to adopt an embedded DSL than a non-embedded DSL and the harmony of the DSL with the embedding environment further increases the likelihood of adoption.

(11) Particular presentations of features such as a language-as-a-library improve the likelihood of short-term and long-term feature adoption.

(12) Implementing an input-output library is a good way to test the expressive power and functionality of a language.

(13) Open-source code bases are often representative of the complete universe of users.

(14) Most users are tolerant of compilers and interpreters that report back anonymized statistics about program attributes.

(15) Many programming language features such as modularity mechanisms are tied to their social use.

My Thoughts

On compilation time and interpreter startup time

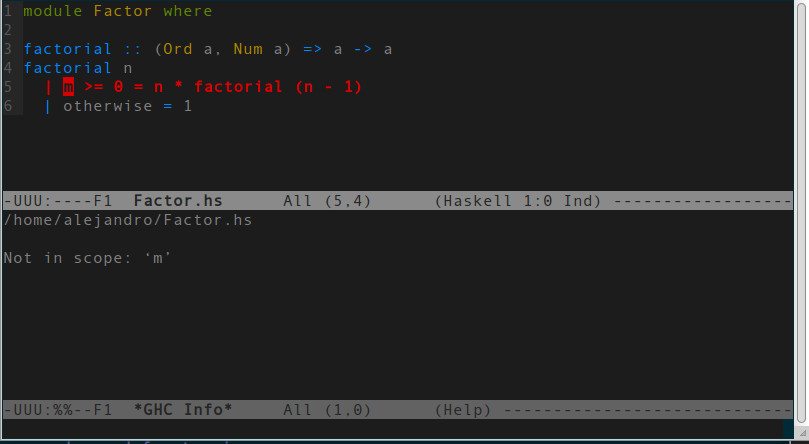

First, there must be an interpreted mode for a language. Being able to hop into a REPL to experiment with a language feature has been extremely valuable to me, especially when I was first learning Python and, later, when I started learning Haskell. With Haskell in particular, once I understood how the type system worked, I found it easier to prototype at the type level using ghc-mod and Emacs.

This brings me to my next point: hypothesis (9). I believe it to be true generally, and I’m certain that it is true for me personally. To that end, I advocate that languages decouple their type-checking phase from the rest of their compilation pipeline, all the better if that phase is exposed as a compiler mode or even as a library. I’ve used this to great effect while working on Haskell code in Emacs: knowing whether what I’ve written will even build is often just a save away. It takes less than a second (often closer to 100 ms) to get rich feedback. By rich, I mean that errors are highlighted, and hovering over a particular line shows the cause of the error as a compiler type error message or as a linter suggestion.
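To make the decoupling concrete, here is a minimal sketch of a toy pipeline in which type checking is its own phase, callable without evaluating anything. It is written in Python for brevity; the tiny expression language and the names `typecheck`, `check_only`, `evaluate`, and `run` are hypothetical and say nothing about how GHC is organized internally (GHC does offer a check-without-code-generation mode through its `-fno-code` flag).

```python
from dataclasses import dataclass
from typing import List, Union

# Hypothetical toy language: int/bool literals, addition, and if-expressions.
@dataclass(frozen=True)
class Lit:
    value: Union[int, bool]

@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"

@dataclass(frozen=True)
class If:
    cond: "Expr"
    then: "Expr"
    other: "Expr"

Expr = Union[Lit, Add, If]

class CheckError(Exception):
    """Raised by the type-checking phase; carries a human-readable diagnostic."""

def typecheck(e: Expr) -> str:
    """Phase 1: infer 'int' or 'bool' without evaluating anything."""
    if isinstance(e, Lit):
        # Test bool first: in Python, bool is a subclass of int.
        return "bool" if isinstance(e.value, bool) else "int"
    if isinstance(e, Add):
        lt, rt = typecheck(e.left), typecheck(e.right)
        if lt != "int" or rt != "int":
            raise CheckError(f"Add expects int + int, got {lt} + {rt}")
        return "int"
    if isinstance(e, If):
        if typecheck(e.cond) != "bool":
            raise CheckError("If condition must be bool")
        t1, t2 = typecheck(e.then), typecheck(e.other)
        if t1 != t2:
            raise CheckError(f"If branches differ: {t1} vs {t2}")
        return t1
    raise CheckError(f"unknown expression: {e!r}")

def evaluate(e: Expr):
    """Phase 2: assumes e has already passed typecheck."""
    if isinstance(e, Lit):
        return e.value
    if isinstance(e, Add):
        return evaluate(e.left) + evaluate(e.right)
    if isinstance(e, If):
        return evaluate(e.then) if evaluate(e.cond) else evaluate(e.other)
    raise ValueError(f"unknown expression: {e!r}")

def check_only(e: Expr) -> List[str]:
    """What an editor could call on every save: diagnostics only, nothing is run."""
    try:
        typecheck(e)
        return []
    except CheckError as err:
        return [str(err)]

def run(e: Expr):
    """The full pipeline reuses the same checker before evaluating."""
    typecheck(e)
    return evaluate(e)

good = If(Lit(True), Add(Lit(1), Lit(2)), Lit(0))
bad = Add(Lit(1), Lit(True))
print(check_only(good))  # []
print(check_only(bad))   # ['Add expects int + int, got int + bool']
print(run(good))         # 3
```

The design choice worth noting is that `check_only` and `run` share a single `typecheck`, so the fast path an editor uses cannot disagree with the full build about what is well-typed.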

I can speak to hypothesis (10) a bit. Haskell projects like Parsec and Cryptol make a strong case for this concept. Parsec provides a potent eDSL for parsing arbitrary data, and constructing an efficient parser with it is surprisingly pleasant. Cryptol goes even further, challenging what I believed was possible with Haskell: it is a domain-specific language, implemented in Haskell, for specifying cryptographic algorithms, which in turn generates provably correct C code. There is much merit to the proliferation of harmonious eDSLs.
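The notion of harmony in hypothesis (10) is easier to see in code than to describe. Parsec is a Haskell library; the sketch below uses Python instead, to stay runnable without extra packages, and builds a tiny parser-combinator eDSL in the same spirit. The names `satisfy`, `many1`, and `sep_by1` loosely echo Parsec’s vocabulary, but every definition here is a hypothetical illustration, not Parsec’s API.

```python
# A minimal parser-combinator eDSL, loosely in the spirit of Parsec
# (hypothetical definitions, not Parsec's API).
# A parser is a function (text, pos) -> (value, new_pos), or None on failure.

class Parser:
    def __init__(self, fn):
        self.fn = fn

    def __call__(self, text, pos):
        return self.fn(text, pos)

    def __or__(self, other):
        """p | q: try p; if it fails, try q from the same position."""
        return Parser(lambda s, i: self(s, i) or other(s, i))

    def __rshift__(self, other):
        """p >> q: run p, then q, keeping only q's result."""
        def run(s, i):
            r = self(s, i)
            return None if r is None else other(s, r[1])
        return Parser(run)

    def map(self, f):
        """Transform the parsed value with an ordinary Python function."""
        def run(s, i):
            r = self(s, i)
            return None if r is None else (f(r[0]), r[1])
        return Parser(run)

def satisfy(pred):
    """Consume one character if pred accepts it."""
    return Parser(lambda s, i: (s[i], i + 1) if i < len(s) and pred(s[i]) else None)

def char(c):
    return satisfy(lambda x: x == c)

def many(p):
    """Zero or more p; stops on failure or on a match that consumed nothing."""
    def run(s, i):
        out = []
        while True:
            r = p(s, i)
            if r is None or r[1] == i:
                return out, i
            out.append(r[0])
            i = r[1]
    return Parser(run)

def many1(p):
    """One or more p."""
    def run(s, i):
        out, j = many(p)(s, i)
        return (out, j) if out else None
    return Parser(run)

def sep_by1(p, sep):
    """p (sep p)*, returning the list of p results."""
    def run(s, i):
        first = p(s, i)
        if first is None:
            return None
        rest, j = many(sep >> p)(s, first[1])
        return [first[0]] + rest, j
    return Parser(run)

def parse(p, text):
    """Run p and succeed only if it consumed the whole input."""
    r = p(text, 0)
    return r[0] if r is not None and r[1] == len(text) else None

# The grammar reads as ordinary Python expressions built from the combinators above.
digit = satisfy(lambda c: c in "0123456789")
number = many1(digit).map(lambda ds: int("".join(ds)))
signed = (char("-") >> number).map(lambda n: -n) | number
numbers = sep_by1(signed, char(","))

print(parse(numbers, "1,22,-333"))  # [1, 22, -333]
print(parse(numbers, "1,,2"))       # None
```

Here the harmony comes from parsers being ordinary values: they are combined with the host language’s own operators (`|`, `>>`) and post-processed with plain functions via `map`, so there is no separate syntax or toolchain to learn.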

Hypothesis (14) makes me nervous. I would personally be fine submitting anonymized statistics, but only if the choice is clearly presented to users and their consent is explicitly given.
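Whether a given statistic is truly anonymous is a hard question in its own right, but the consent requirement is easy to express. Below is a minimal sketch in Python, assuming (loudly) that counts of syntax-node kinds are coarse enough to share; the function names `program_stats` and `maybe_report` are hypothetical, and a real tool would need far more care about what counts as identifying. It relies only on Python’s standard `ast` module.

```python
import ast
from collections import Counter
from typing import Optional

# Assumption: node-kind counts are treated as "anonymized" for illustration only;
# this is not a vetted anonymization scheme.

def program_stats(source: str) -> Counter:
    """Count syntax-node kinds such as 'Lambda' or 'BinOp'.

    Only node type names are recorded: identifiers, literals, and the
    source text itself are not included in the result.
    """
    tree = ast.parse(source)
    return Counter(type(node).__name__ for node in ast.walk(tree))

def maybe_report(source: str, user_consented: bool) -> Optional[dict]:
    """Build a telemetry payload only when the user has explicitly opted in."""
    if not user_consented:
        return None  # default: nothing is collected or sent
    return dict(program_stats(source))

snippet = "f = lambda x: x + 1"
print(maybe_report(snippet, user_consented=False))  # None
payload = maybe_report(snippet, user_consented=True)
print(payload["Lambda"], payload["BinOp"])          # 1 1
```

Opting out is the default path here: without explicit consent, `maybe_report` returns before the program is even parsed.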

I’d like to add a question that may not have been considered:

- How does the presence of legacy software, and its maintenance requirements, affect users’ ability to choose new languages and techniques?

From what I’ve seen in my short time working in industry, this is a very real problem. I’m sympathetic to the people in this position because I’ve spent some time helping out in these scenarios. A rewrite isn’t always possible, unfortunately.

Closing Thoughts

I’m happy to see social considerations being explored in the programming languages space. It’s a problem that’s dear to me, since I spend a bit of my time advocating for the adoption of empowering type systems and functional techniques. I think I’ll sit with this paper for some time and see how it changes how I look at the process of advocacy.

Footnotes

¹ R. Gabriel. The rise of “worse is better”. Lisp: Good News, Bad News, How to Win Big, 1991.

² Describing a type system, in whole or in part, as sexy is unnecessary and potentially harmful. I’m aware of part of the origin of this phrasing. Words like “empowering”, “enabling”, or “intriguing” avoid the slew of issues that “sexy” carries and express the idea more clearly. That’s a fair topic for another time.